Communication Across Worldviews With Sociotechnical Systems

by Brian Rayburn

posted Jan 22, 2024

Conflict in Today's World

Making decisions that intentionally and effectively shape the world around us requires understanding the world's complexity from multiple perspectives, or worldviews. The idea that a society functions better with a diversity of worldviews is called pluralism, and I believe it is a necessary, fundamental property of any society ready to take on current and future challenges. The idea is simple, but a diversity of worldviews does not by itself give a group the ability to make decisions intentionally and effectively. It can also produce conflict that degrades the group's ability to make decisions at all.

Sound familiar? If you live in the US, you've seen the government's decreasing ability to make decisions that seem compatible with the worldviews of large segments of the population. You've seen social groups in conflict, whether they are of different political parties, cultural backgrounds, or religious worldviews.

As citizens of the world, we have seen the same inability to coordinate across groups, as a wave of nationalistic political movements has led countries to protectively look out for their own survival while decreasing their ability to coordinate internationally. Or was it the other way around: has the inability to coordinate on our global problems created an environment where groups feel the need to protect their own local interests amid a chaotic global sociopolitical climate? Or maybe it is a feedback loop, where each of these drives the other? For the purpose of this post, I'm not going to worry about this distinction. Instead, I'll focus on ways to increase our ability to coordinate so that perhaps we can adapt to the increasing global tensions.

The conflicts we're facing will ultimately be resolved. The question is how we transition to a place of stability across these divides while minimizing the violence and suffering (physical, mental, and emotional) in the process.

Decision Making - OODA Loops and Worldviews

Let us talk a bit about how decisions get made in general.

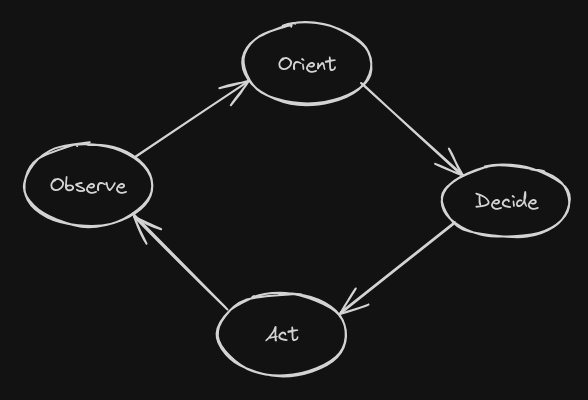

First, I want to share a framework called the OODA Loop (Observe, Orient, Decide, Act), developed by United States Air Force Colonel John Boyd as a way to think about making decisions quickly in combat scenarios. Since its inception, it has been applied to everything from corporate America to litigation in the court systems.

By viewing the process as a cycle, we can see how the information we have, and therefore how we understand the world, depends heavily on our previous experiences, decisions, and actions. Applied to groups, this means that if everyone involved in a decision has had similar experiences, each person adds little to the orientation stage of the decision-making process, and so contributes only marginally to the group's ability to decide effectively.

But then why don't groups always seek out a diverse set of people with disparate worldviews to increase the group's ability to survive and thrive?

To be able to synthesize a group orientation from those different worldviews, you need to understand how to combine them, which means understanding something about how those worldviews are related to each other.

A Challenge of Diversity

There's another tricky bit here: Because another person's worldview may initially seem incompatible with our own, we sometimes try to assert our notion of truth by finding problems with the other's worldview and declaring "Look at the ways in which this other's worldview is wrong!"

Here's the thing: Our worldview is wrong too, or at the very least incomplete in a way that leaves us well short of being able to determine some grand notion of absolute truth. But we need our worldviews because we need to make decisions in the world. We need to go through our lives doing the things we need to survive, interacting with other humans, and helping build society, whether through intention or accident. Otherwise our worldview will cease to be, and the worldviews of those who survive will remain. Ok, so we need our worldviews, and we need to coordinate with other people and their worldviews. So what can we do about it?

Something(s) We Can Do About It

I've been thinking a lot about how we could overcome the tendency to get defensive of our worldviews, in a way that reduces harm for those we're interacting with. It's a tricky problem, but I think I've found a few ways to start approaching it. I hope to continue exploring potential solutions and to get the chance to validate these designs and more in the future.

AI Non-Violent Communication Moderator

First, let's look at how to use an approach from psychology for reducing harm in communication: Non-Violent Communication (NVC). Here's a link to The Book on NVC. Here is a very short summary of NVC from ChatGPT (fact-checked for accuracy):

Non-violent Communication (NVC), developed by Marshall Rosenberg, emphasizes empathy and clarity in interactions. It involves four key steps: objectively observing situations without judgment, expressing feelings, identifying underlying needs, and making specific, non-demanding requests. This approach fosters deeper understanding and connection by focusing on mutual human values and needs, rather than on persuading or winning. NVC is effective in enhancing personal and professional relationships, promoting honesty, empathy, and compassionate resolution of conflicts.

Thanks ChatGPT!

See the relationship between this framework and the problems above?

So here's a scenario involving a sociotechnical system that might leverage this framework at scale:

- Person A and Person B need to make a decision together, but first they need to orient around the problem and build a shared understanding of the context in which the decision is being made.

- Person A starts out by making a statement about the context such as "All glorps are gluewiggly." I don't know what glorps or gluewiggly are but it doesn't really matter.

- Before the previous statement gets sent to Person B, an AI knowledgeable in NVC checks to see if the statement is consistent with NVC principles. Let's say for now that it is, so the message gets delivered to Person B.

- Person B does not agree with this statement and says "That is wrong!" which is definitely judgmental of Person A's statement and does not really add any new information the group can use to try to reach alignment.

- This message gets passed to our trusty AI NVC moderator, which says "That statement does not follow NVC principles because .... Perhaps clarifying what you each mean by glorps and gluewiggly directly would let the conversation progress more peacefully. Additionally, you may want to express your emotions directly in words so Person A does not perceive them as a threat."

At this point I need to say that I am not a psychologist and whatever the AI moderator would say should be determined by someone with formal training in NVC, but I'm hoping the general idea is being communicated here. Let's continue:

- Person B reframes his statement to "When I hear you say that 'All glorps are gluewiggly' I feel distraught since my Grandfather was a glorp and not gluewiggly. Maybe we aren't using the same definition for 'gluewiggly.' Can you share with me what you mean by that word?"

- Assuming this meets the principles of NVC, the message makes it past our AI NVC Moderator and gets delivered to Person A.

Ok, so that's an idea, though probably short of a full solution to the problems we described. There are many cases where there may be great nuance in determining whether a statement is consistent with NVC principles, much less in providing helpful feedback to help the user adjust their communication. We have to start somewhere though. I think this approach is ripe for further investigation.
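To make the message flow in the scenario above a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the names `Review`, `toy_nvc_check`, and `ModeratedChannel` are hypothetical, and the keyword check is only a stand-in for the AI moderator, whose real behavior would need to be designed by someone with formal training in NVC. The only point is the shape of the loop: a message is reviewed first, and it is either delivered or returned to the sender with feedback.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Review:
    """Result of checking one message: deliverable or not, plus feedback."""
    ok: bool
    feedback: str = ""


# Hypothetical placeholder for the AI moderator. A real system would call a
# model here; this keyword list is NOT a real test of NVC principles.
JUDGMENT_PHRASES = ("that is wrong", "that's wrong", "you are wrong")


def toy_nvc_check(message: str) -> Review:
    lowered = message.lower()
    for phrase in JUDGMENT_PHRASES:
        if phrase in lowered:
            return Review(
                ok=False,
                feedback=(
                    f"'{phrase}' judges the other person's statement. "
                    "Consider sharing what you observed, how you feel, "
                    "and what you need, then making a request."
                ),
            )
    return Review(ok=True)


@dataclass
class ModeratedChannel:
    """Delivers a message only if the moderator's check passes."""
    check: Callable[[str], Review]
    delivered: List[Tuple[str, str, str]] = field(default_factory=list)

    def send(self, sender: str, recipient: str, message: str) -> Review:
        review = self.check(message)
        if review.ok:
            # Passed review: record it as delivered to the recipient.
            self.delivered.append((sender, recipient, message))
        # Either way, the sender gets the review back so they can reframe.
        return review


channel = ModeratedChannel(check=toy_nvc_check)
channel.send("Person A", "Person B", "All glorps are gluewiggly.")
blocked = channel.send("Person B", "Person A", "That is wrong!")
reframed = channel.send(
    "Person B",
    "Person A",
    "When I hear you say that 'All glorps are gluewiggly' I feel distraught. "
    "Can you share with me what you mean by that word?",
)
```

In this sketch, `blocked.ok` is false and `blocked.feedback` goes back to Person B, while the reframed message ends up in `channel.delivered`. Passing the check in as a function is a deliberate choice: the delivery logic stays the same no matter how the moderator itself is eventually built.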

Knowledge Graph Based Worldview Relation Modeling

Another approach would be to use AI to translate Person A's worldview, as they're describing it, into a knowledge graph. Person B could do the same. To build understanding across these worldviews, each person would be able to ask questions. An AI would answer from the knowledge graph if it already contains the information, or otherwise ask the other person for what is needed to extend their knowledge graph and complete the answer. Through this process, each person can build an understanding of both the other's perspective and its relationship to their own worldview, without unintentionally communicating emotions through tone and secondary meanings.
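The answer-or-ask loop described above could be sketched roughly as follows. This is an illustration under stated assumptions, not a design: the class `WorldviewGraph` and the function `answer_or_escalate` are hypothetical names, the graph is reduced to simple (subject, relation, object) triples, and the AI step that would translate a person's description into those triples is left out entirely.

```python
from collections import defaultdict
from typing import Dict, Optional, Set, Tuple


class WorldviewGraph:
    """One person's statements stored as (subject, relation, object) triples."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self._edges: Dict[Tuple[str, str], Set[str]] = defaultdict(set)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._edges[(subject, relation)].add(obj)

    def ask(self, subject: str, relation: str) -> Optional[Set[str]]:
        # .get avoids creating an empty entry for a question we cannot answer.
        found = self._edges.get((subject, relation))
        return set(found) if found else None


def answer_or_escalate(graph: WorldviewGraph, subject: str, relation: str) -> str:
    """Answer from the graph if possible; otherwise ask the graph's owner."""
    answer = graph.ask(subject, relation)
    if answer is None:
        # Missing information: route a neutral question to the owner, whose
        # reply would then be added to the graph with graph.add(...).
        return f"Question for {graph.owner}: what does '{subject}' '{relation}'?"
    return f"{graph.owner} holds that {subject} {relation} {', '.join(sorted(answer))}."


graph_a = WorldviewGraph("Person A")
graph_a.add("glorps", "are", "gluewiggly")

known = answer_or_escalate(graph_a, "glorps", "are")
unknown = answer_or_escalate(graph_a, "gluewiggly", "mean")
```

Here `known` is answered directly from Person A's graph, while `unknown` becomes a question routed back to Person A, because the graph has nothing yet about what "gluewiggly" means. Note that the question is phrased the same way regardless of how Person B originally asked it, which is where the tone-stripping discussed next comes from.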

A critique of this approach I've considered is that normalizing text in this way can feel like it strips the speaker's communication of its humanity by sanitizing the aspects of language that are not explicitly stated. My guess (pending further research) is that this creates a tradeoff that must be understood in the context of the conversation, especially its purpose. There may be times when it is critical for people to work together to build an understanding of aspects of each other's worldviews, and any additional information in the communication can be sacrificed in service of that goal. There are also situations where this type of interaction could deepen the divides between the people involved, making empathy more challenging by hiding the human element from the person on the other end of the conversation.

Final Note

Understanding when a solution is an appropriate tool for the problems described at the beginning of this post requires a better understanding of the variety of conditions under which these social problems arise, and of the goals and needs of the participants within the context of the decision being made. I can only hope that further exploration will reveal whether these or other such approaches can alleviate the harms caused by trying to make decisions together without understanding and empathy.